TVM Learning (9)-GPU and Hardware Acceleration, Part 2

Key Elements of Specialized Code

The following Python code, written in low-level NumPy, shows a set of operations that a specialized hardware backend might use.

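We assume that the basic compute unit performs a 16x16 matrix multiplication (accel_tmm_add). It takes two RHS inputs held in registers and an LHS input that holds the accumulated intermediate result. Data is copied with a dedicated function (accel_dma_copy).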
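A minimal sketch of what such low-level NumPy code might look like. The names accel_tmm_add and accel_dma_copy come from the text; accel_fill_zero, lnumpy_tmm, the register names, and the convention that B is stored transposed (so the result is A @ B.T) are assumptions made for illustration.

```python
import numpy as np

TILE = 16  # the assumed hardware unit works on 16x16 tiles


def accel_fill_zero(c):
    """Hypothetical primitive: clear an accumulator tile in place."""
    c[:] = 0


def accel_tmm_add(c, a, b):
    """Tile multiply-accumulate: c += a @ b.T (b is assumed transposed)."""
    c[:] += a @ b.T


def accel_dma_copy(dst, src):
    """Dedicated copy between main memory and on-chip storage."""
    dst[:] = src


def lnumpy_tmm(A, B, C):
    """Compute C = A @ B.T tile by tile, using only the primitives above."""
    n_i = A.shape[0] // TILE
    n_j = B.shape[0] // TILE
    n_k = A.shape[1] // TILE
    # Stand-ins for the special on-chip memories.
    a_reg = np.empty((TILE, TILE), dtype=A.dtype)
    b_reg = np.empty((TILE, TILE), dtype=B.dtype)
    c_acc = np.empty((TILE, TILE), dtype=C.dtype)
    for i in range(n_i):
        rows = slice(i * TILE, (i + 1) * TILE)
        for j in range(n_j):
            cols = slice(j * TILE, (j + 1) * TILE)
            accel_fill_zero(c_acc)
            for k in range(n_k):
                red = slice(k * TILE, (k + 1) * TILE)
                accel_dma_copy(a_reg, A[rows, red])
                accel_dma_copy(b_reg, B[cols, red])
                accel_tmm_add(c_acc, a_reg, b_reg)
            # Write the finished accumulator tile back to main memory.
            accel_dma_copy(C[rows, cols], c_acc)
```

The sketch requires every dimension to be a multiple of 16; for the 1024x1024 case each of the three tile loops would run 64 times.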

A Block with Tensorized Computation

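Specialized accelerator code is not structured around scalar computation. Most of the TensorIR code we have run so far contains a block that computes a single element of the output tensor, whereas many specialized accelerators compute over regions of a tensor. A block in TensorIR lets us group such related computation together.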

Calling MatmulBlockModule.show() displays the following TensorIR:

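This code reads from 16x16 regions of A and B and writes to a 16x16 region of C. Here the body of the block carries the further details of how the sub-region computation is implemented. We call such a block a tensorized block, because it contains computation that spans sub-regions of the tensors.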
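To make the idea of a block signature concrete, here is a NumPy analogy rather than TensorIR itself. The helper names block_regions and matmul_block, the block variables vi0, vj0, vk0, and the B[vj, vk] access pattern are assumptions for illustration.

```python
import numpy as np

TILE = 16


def _tile(v):
    """Index range covered by tile number v."""
    return slice(v * TILE, (v + 1) * TILE)


def block_regions(vi0, vj0, vk0):
    """Regions one block instance reads and writes, as (rows, cols) slices."""
    return {
        "read_A": (_tile(vi0), _tile(vk0)),
        "read_B": (_tile(vj0), _tile(vk0)),
        "write_C": (_tile(vi0), _tile(vj0)),
    }


def matmul_block(A, B, C, vi0, vj0, vk0):
    """Body of one tensorized block: scalar loops confined to its regions."""
    if vk0 == 0:
        # Initialize the output region on the first reduction step.
        C[block_regions(vi0, vj0, vk0)["write_C"]] = 0
    for i1 in range(TILE):
        for j1 in range(TILE):
            for k1 in range(TILE):
                vi = vi0 * TILE + i1
                vj = vj0 * TILE + j1
                vk = vk0 * TILE + k1
                C[vi, vj] += A[vi, vk] * B[vj, vk]
```

From the outside, only the three regions matter; the scalar loops inside the body are an implementation detail that can later be swapped for something else.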

Transforming Loops Around Tensorized Block

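We can transform the loops that surround a tensorized block. These loop transformations reorganize the order in which the iterations that compute the block are visited, yielding different tensor programs.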
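The effect can be illustrated with a NumPy analogy in which the block is treated as an opaque unit and only the surrounding loops change. The function names, the split factor of 4, and the A @ B.T convention are assumptions; C is expected to be zeroed by the caller.

```python
import numpy as np

TILE = 16


def _tile(v):
    return slice(v * TILE, (v + 1) * TILE)


def tile_update(A, B, C, i0, j0, k0):
    """Stand-in for the tensorized block: one 16x16 multiply-accumulate."""
    C[_tile(i0), _tile(j0)] += A[_tile(i0), _tile(k0)] @ B[_tile(j0), _tile(k0)].T


def original_order(A, B, C, n):
    """Plain i0, j0, k0 nest around the block."""
    for i0 in range(n):
        for j0 in range(n):
            for k0 in range(n):
                tile_update(A, B, C, i0, j0, k0)


def split_and_reordered(A, B, C, n, factor=4):
    """Split i0 into (outer, inner) and move the inner part inside j0."""
    assert n % factor == 0, "the split factor must divide the loop extent"
    for i0_outer in range(n // factor):
        for j0 in range(n):
            for i0_inner in range(factor):
                i0 = i0_outer * factor + i0_inner
                for k0 in range(n):
                    tile_update(A, B, C, i0, j0, k0)
```

Both functions visit the same set of (i0, j0, k0) block instances, so they produce the same C; only the traversal order, and therefore the memory access pattern, differs.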

Blockization – Creating Tensorized Blocks

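TensorIR provides a transformation primitive, blockize, that groups a sub-region of loops together to form a tensorized computation block. For example, we can decompose the multiplication of two 1024x1024 matrices below into many 16x16 matrix multiplications.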

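blockize merges one or more blocks, or the subtree rooted at a specific loop, into a new block. If target is the root loop of a subtree, everything under that loop is merged into one new block; if target is a list of blocks, those blocks are merged into one new block. The new block is then returned.

Parameters:

- target: the blocks, or the root loop of the subtree, to be merged. It is either a LoopRV (a single loop) or a List[BlockRV] (several blocks).

- preserve_unit_iters: a boolean that controls whether unit iterators in the block bindings are preserved.

Restrictions:

blockize requires exactly one block under the given loop, and the bindings of that block must be divisible by the subspace represented by the loops starting at the given loop.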
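The index arithmetic behind this grouping can be sketched in plain Python. This is an analogy and not the TVM API: flat_iterations and blockized_iterations are hypothetical helpers, and small extents are used in place of 1024 and 16 so the lists stay short.

```python
from itertools import product


def flat_iterations(n):
    """Scalar loop nest: every (i, j, k) point of an n x n x n matmul."""
    return list(product(range(n), repeat=3))


def blockized_iterations(n, tile):
    """Same points, visited as outer tile loops around one inner group each."""
    if n % tile != 0:
        # Mirrors the divisibility restriction described above.
        raise ValueError("loop extent must be divisible by the tile size")
    points = []
    for i0, j0, k0 in product(range(n // tile), repeat=3):
        # Everything in this inner nest is what the new block would own.
        group = [
            (i0 * tile + i1, j0 * tile + j1, k0 * tile + k1)
            for i1, j1, k1 in product(range(tile), repeat=3)
        ]
        points.extend(group)
    return points
```

Splitting each loop as i = i0 * tile + i1 and moving the i1, j1, k1 loops innermost leaves the iteration space unchanged; grouping those inner loops into one unit is what turns a scalar nest into a tensorized block.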

The TensorIR after calling blockize is as follows:

Transforming TensorIR to Introduce Special Memory Scope

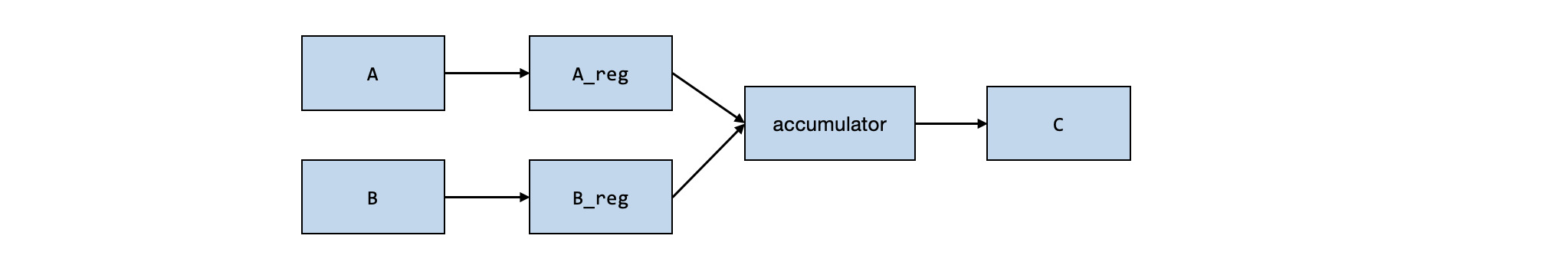

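As mentioned with the low-level NumPy code, a key element of low-level TensorIR is the special memory scope used during acceleration. We can use cache_read and cache_write to create intermediate memory stages.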

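storage_scope here refers to the memory scope, that is, the level of the memory hierarchy in which the data is stored. Common scopes include:

- global: the data is stored in global memory. This is the top-level memory scope.

- shared: the data is stored in GPU shared memory.

- local: the data is stored in thread-local memory, typically CPU or GPU registers. This is the lowest-level memory scope.

global.A_reg means the data is cached in a global-memory buffer tagged A_reg.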

Tensorization

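We have now created a set of blocks that map to the corresponding computation stages in TensorIR. The remaining step is to map some of the tensorized blocks to a specific implementation that corresponds to a hardware-accelerated instruction. This mapping process is called tensorization. To prepare for it, we first register a TensorIntrin that contains a description of the computation and its implementation.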
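The description/implementation pairing can be pictured with a small NumPy analogy. The registry below is hypothetical and is not TVM's TensorIntrin; tmm16_impl is named in the text, while tmm16_desc, register_intrin, and the A @ B.T convention are assumptions.

```python
import numpy as np

INTRINSICS = {}  # hypothetical registry: name -> (description, implementation)


def register_intrin(name, desc, impl):
    """Record a description and an implementation under one name."""
    INTRINSICS[name] = (desc, impl)


def tmm16_desc(C, A, B):
    """Description: the semantics, spelled out as scalar loops."""
    for i in range(16):
        for j in range(16):
            for k in range(16):
                C[i, j] += A[i, k] * B[j, k]


def tmm16_impl(C, A, B):
    """Implementation: one call standing in for the hardware instruction."""
    C[:] += A @ B.T


register_intrin("tmm16", tmm16_desc, tmm16_impl)
```

The description says what a matching block must compute, and the implementation says what to emit in its place. Tensorization is valid only when the two agree on every input, which is the property checked when a block is matched against the description.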

First we use decompose_reduction to separate the initialization and the update of C_global_accumulator into T.block("matmul_init") and T.block("matmul_o_update").
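What this separation does to the loop structure can be shown with a NumPy analogy. The function names and the choice to decompose at the k0 loop are assumptions for illustration; the comments indicate which part plays the role of each block.

```python
import numpy as np

TILE = 16


def _tile(v):
    return slice(v * TILE, (v + 1) * TILE)


def fused_init_update(A, B, C, n):
    """Before: initialization is guarded inside the reduction loop."""
    for i0 in range(n):
        for j0 in range(n):
            out = (_tile(i0), _tile(j0))
            for k0 in range(n):
                if k0 == 0:
                    C[out] = 0
                C[out] += A[_tile(i0), _tile(k0)] @ B[_tile(j0), _tile(k0)].T


def decomposed_init_update(A, B, C, n):
    """After: a separate init step precedes a pure update loop."""
    for i0 in range(n):
        for j0 in range(n):
            out = (_tile(i0), _tile(j0))
            # Plays the role of the matmul_init block.
            C[out] = 0
            for k0 in range(n):
                # Plays the role of the matmul_o_update block.
                C[out] += A[_tile(i0), _tile(k0)] @ B[_tile(j0), _tile(k0)].T
```

After the split, the update step is an unconditional multiply-accumulate, which is the shape a multiply-accumulate instruction such as accel_tmm_add can replace directly.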

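Then we call tensorize to map block_mm (which corresponds to the matmul_o_update block) to tmm16_impl. Here we use T.call_extern to call an external function in the environment. A downstream compilation step can easily map the implementation to an instruction that carries out the operation. Alternatively, we can map tmm16 to a micro-kernel that implements this tensorized computation. The following code shows how to do this with external C++ code.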

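The steps are as follows:

- Define a C++-style tmm16 function: this function implements the computation of a 16x16 matrix multiplication. It takes three input tensors aa, bb, and cc, together with the corresponding strides stride_a, stride_b, and stride_c, and uses a triple loop to accumulate the product into cc.

- Compile the C++ code to LLVM IR with TVM's clang module: first create a temporary directory temp to hold the generated LLVM IR file, then call clang.create_llvm() with the C++ source string cc_code. create_llvm() compiles the C++ code to LLVM IR, saves it to the file given by ll_path, and returns the generated LLVM IR.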
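The strided addressing inside such a micro-kernel is easy to get wrong, so here is a Python rendering of the triple loop over flat row-major buffers. It is a sketch of the logic only, not the C++ source: the argument order, the off_a, off_b, off_c offsets (which replace C pointer arithmetic), and the bb[j, k] access pattern are assumptions.

```python
def tmm16(aa, bb, cc, stride_a, stride_b, stride_c, off_a=0, off_b=0, off_c=0):
    """Accumulate cc[i, j] += sum_k aa[i, k] * bb[j, k] on one 16x16 tile.

    Each buffer is a flat, row-major sequence. stride_* is the row pitch of
    the enclosing matrix, and off_* is the flat index of the tile's first
    element (hypothetical, standing in for a C pointer to the tile).
    """
    for i in range(16):
        for j in range(16):
            for k in range(16):
                cc[off_c + i * stride_c + j] += (
                    aa[off_a + i * stride_a + k] * bb[off_b + j * stride_b + k]
                )
    return 0
```

Because each tile lives inside a larger matrix, consecutive rows of the tile are stride elements apart rather than 16, which is why the kernel takes the strides as arguments.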

Calling sch.tensorize(block_mm, "tmm16") raises an error; the cause is unknown.
